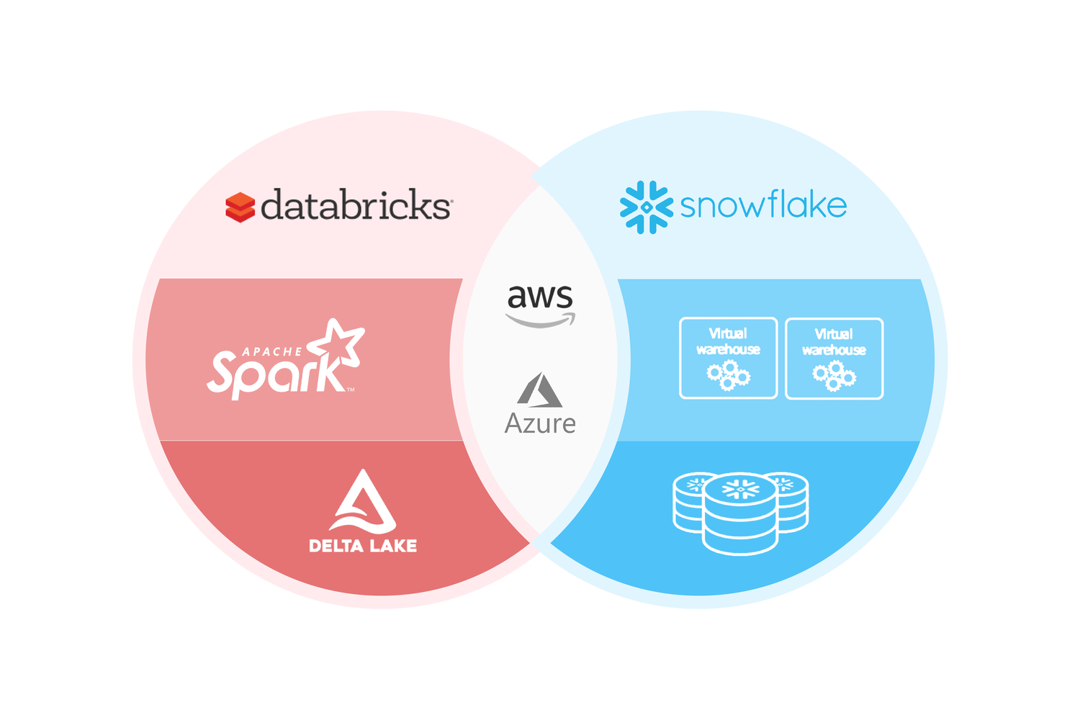

Databricks vs Snowflake. Which is best for Data Analysis?

What is Snowflake?

Snowflake is a comprehensive, fully managed software-as-a-service (SaaS) platform that offers a unified solution for data warehousing, data lakes, data engineering, data science, and data application development, as well as secure sharing and consumption of real-time or shared data. It provides out-of-the-box features such as separation of storage and compute, on-the-fly scalable computing, data sharing, data cloning, and third-party tool support to meet the diverse needs of growing enterprises.

Snowflake is a fully managed service (the platform manages itself), which means:

- No virtual or physical hardware to select, install, configure, or manage.

- No software to install.

- Snowflake handles maintenance, scale-up/scale-down, and tuning.

Snowflake runs entirely on cloud infrastructure: it uses virtual compute instances for its compute needs and a storage service for persistent storage of data.

Advantages

- A rich ecosystem of partnerships and integrations, backed by significant investment, which leaves room for ongoing extensibility.

- Pre-purchased capacity pricing options for more predictable costs.

- Simplified administration tasks.

- Strong core data warehousing capabilities.

Disadvantages

- It does not directly support all AI/ML use cases, and augmenting it with third-party apps can make the required functionality harder to configure and manage.

- Snowflake is not best suited for streaming and real-time use cases.

- Out-of-the-box administration functionality cannot always be modified or fine-tuned for specific needs.

- Performance issues may arise when dealing with large data volumes.

- Snowflake is built on proprietary technology and does not support open-source-based technology. Once customers choose it, they are locked into Snowflake's proprietary technology without much flexibility.

- Snowflake can incur heavy costs for data processing.
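The cost points in the two lists above (predictable pricing as an advantage, heavy processing costs as a disadvantage) are easier to weigh with a rough estimate. Snowflake bills compute in credits, and the sketch below multiplies credits per hour by running time and a price per credit. The credits-per-hour table reflects the usual doubling per warehouse size and should be checked against current pricing documentation; the price per credit is a placeholder assumption, and `estimate_cost` is a hypothetical helper, not part of any Snowflake API.

```python
# Credits consumed per hour by warehouse size. Each size doubles the previous
# one; verify against Snowflake's current pricing documentation.
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def estimate_cost(size: str, hours_per_day: float, days: int,
                  price_per_credit: float) -> float:
    """Estimate compute spend for one warehouse (hypothetical helper).

    price_per_credit is an assumption supplied by the caller; it depends on
    edition, cloud region, and contract terms.
    """
    if size not in CREDITS_PER_HOUR:
        raise ValueError(f"unknown warehouse size: {size}")
    credits = CREDITS_PER_HOUR[size] * hours_per_day * days
    return credits * price_per_credit


if __name__ == "__main__":
    # Assumed price of 3.00 per credit, purely for illustration.
    for size in ("S", "L"):
        cost = estimate_cost(size, hours_per_day=8, days=30, price_per_credit=3.0)
        print(f"{size}: {cost:.2f}")
```

Because each step up in warehouse size doubles the credit rate, running the same hours on a Large warehouse costs four times as much as on a Small one. This is why sizing and auto-suspend settings tend to dominate the bill.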

What is Databricks?

Databricks is a cloud-based platform that helps you to process, transform and make available huge amounts of data to multiple user personas for many use cases, including BI, Data Warehousing, Data Engineering, Data Streaming, Data Science, and ML. It is also a one-stop product for all data requirements such as storage and analysis.

People use Databricks to process, store, clean, share, analyze, model, and monetize their data with solutions ranging from BI to machine learning. The platform can also be used to build and deploy data engineering workflows, analytics dashboards, and more.

Advantages

- Databricks is built on top of the open-source Apache Spark framework, so there is no vendor lock-in.

- Databricks allows for the analysis of structured, semi-structured, and unstructured data, and it works with both batch and streaming use cases.

- The original Lakehouse platform delivers the best of both data lakes and data warehouses, so you do not need to invest in two different platforms.

- Databricks supports advanced AI capabilities, including machine learning, data science, and serverless model serving. It also supports serving models via Kubernetes or other model-serving platforms.

Disadvantages

- Databricks requires a certain level of technical expertise and familiarity with Spark and other big data tools, even though it supports ANSI-based SQL, which is widely used in the industry.

- You need to back up your data files or use Databricks Repos to save them in a project folder.

Databricks vs Snowflake. Which is best for Data Analysis?

1. AI/ML and Data Science

ML Training Options

Databricks

- Built-in model serving capabilities with MLflow integration for MLOps services.

- Single-node and multi-node options for training.

- Supports SparkML or Horovod versions of learning libraries.

- Full integration with MLflow for end-to-end machine learning lifecycle management.

- Built-in tools and libraries for data preprocessing, feature engineering, model training, and evaluation.

- Built-in support for deep learning workloads with tools and libraries such as TensorFlow and Keras.
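Multi-node training of the kind listed above usually relies on data parallelism: each worker computes gradients on its own shard of the data, and the gradients are averaged before the weights are updated (Horovod does this with an allreduce). The following single-process sketch simulates that averaging for a one-parameter linear model. It does not use Horovod or Spark, and the names `grad_mse`, `allreduce_mean`, and `train` are hypothetical.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y)


def grad_mse(w: float, batch: Sequence[Sample]) -> float:
    """Gradient of mean squared error for the model y_hat = w * x."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


def allreduce_mean(values: Sequence[float]) -> float:
    """Stand-in for an allreduce: average one value per simulated worker."""
    return sum(values) / len(values)


def train(data: List[Sample], workers: int, steps: int = 200,
          lr: float = 0.05) -> float:
    # Split the data into equal-sized shards, one per simulated worker.
    # Assumes len(data) is divisible by workers; any remainder is dropped.
    shard_size = len(data) // workers
    shards = [data[i * shard_size:(i + 1) * shard_size] for i in range(workers)]
    w = 0.0
    for _ in range(steps):
        # Each worker computes a gradient on its own shard only.
        local_grads = [grad_mse(w, shard) for shard in shards]
        # Averaging the local gradients gives the full-batch gradient.
        w -= lr * allreduce_mean(local_grads)
    return w


if __name__ == "__main__":
    data = [(float(x), 3.0 * x) for x in range(1, 5)]  # true weight is 3.0
    print(round(train(data, workers=1), 4))
    print(round(train(data, workers=2), 4))
```

With equal-sized shards, the averaged gradient equals the gradient over the whole batch, so the one-worker and two-worker runs reach the same weight. Real multi-node setups add communication cost, which this sketch ignores.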

Snowflake

- Two methods for training:

  a. Snowflake compute, which is limited to a single node.

  b. Third-party compute using platforms such as SageMaker, Dataiku, or Databricks.

- MLOps services must be found externally.

- Limited integration with MLflow.

- Requires the use of third-party tools and libraries for ML, AI, and deep learning.

ML Serving Options

Databricks

- Natively integrated MLflow for model serving.

- Supports batch inference (a UDF that wraps your model's prediction so it can run inside your ETL pipelines) and serverless API endpoint configurations for real-time serving.
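The batch-inference pattern mentioned above amounts to wrapping a model's predict call in a function that the pipeline applies to every row. The sketch below shows that shape in plain Python with a stand-in model. It is not the MLflow or Spark API, and `ThresholdModel`, `make_udf`, `transform`, and the column names are hypothetical.

```python
from typing import Callable, Dict, Iterable, Iterator, List

Row = Dict[str, float]


class ThresholdModel:
    """Stand-in for a trained model loaded from a registry."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold

    def predict(self, features: List[float]) -> int:
        # Toy rule: classify as 1 when the feature sum exceeds the threshold.
        return int(sum(features) > self.threshold)


def make_udf(model: ThresholdModel,
             feature_cols: List[str]) -> Callable[[Row], int]:
    """Wrap model.predict so it can be applied to one row at a time."""
    def udf(row: Row) -> int:
        return model.predict([row[c] for c in feature_cols])
    return udf


def transform(rows: Iterable[Row], udf: Callable[[Row], int]) -> Iterator[Row]:
    """ETL step: pass each row through and append a prediction column."""
    for row in rows:
        yield {**row, "prediction": udf(row)}


if __name__ == "__main__":
    score = make_udf(ThresholdModel(threshold=1.0), ["a", "b"])
    batch = [{"a": 0.2, "b": 0.3}, {"a": 0.9, "b": 0.8}]
    for out in transform(batch, score):
        print(out)
```

On Databricks, the role of `make_udf` would typically be played by MLflow's `mlflow.pyfunc.spark_udf`, which returns a function that Spark applies to DataFrame columns across the cluster.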

Snowflake

- Two methods for model serving: In-Platform Serving and Model API.

  a. In-Platform Serving supports loading models from the Snowflake staging area or an external model registry.

  b. Model API supports hosting models in inference clusters outside of the Snowflake environment.

- Snowpark UDFs can be written to call the trained model's API and send data for inference.

Compute environment

Databricks

Multi-node by default.

Snowflake

Single-node recommended; external compute is required for multi-node workloads and for workloads requiring specific libraries.

2. Use Case support

Data Processing

Databricks

Databricks is built on top of Apache Spark, which provides a powerful engine for data processing workloads. Databricks can handle large-scale data processing tasks, including ETL, data cleaning, and data transformation.
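As a small illustration of the tasks named above (ETL, data cleaning, and data transformation), here is a plain-Python sketch that runs on an in-memory CSV. It uses only the standard library rather than Spark, and the column names, sample data, and cleaning rules are assumptions chosen for the example.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, Iterable, Iterator

# Sample input with a missing amount and inconsistent casing (made up).
RAW = """region,amount
east,10.5
west,
east,4.5
WEST,7
"""


def extract(text: str) -> Iterable[Dict[str, str]]:
    """Extract: parse CSV text into one dict per row."""
    return csv.DictReader(io.StringIO(text))


def clean(rows: Iterable[Dict[str, str]]) -> Iterator[Dict[str, object]]:
    """Clean: drop rows with a missing amount and normalize region casing."""
    for row in rows:
        if not row["amount"].strip():
            continue
        yield {"region": row["region"].strip().lower(),
               "amount": float(row["amount"])}


def total_by_region(rows: Iterable[Dict[str, object]]) -> Dict[str, float]:
    """Transform: total amount per region."""
    totals: Dict[str, float] = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


if __name__ == "__main__":
    print(total_by_region(clean(extract(RAW))))
```

Each stage is a generator feeding the next, so rows stream through without being held in memory all at once. Spark applies the same extract, clean, transform structure but distributes the rows across a cluster.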

Snowflake

Snowflake also provides support for data processing workloads, but its focus is primarily on data warehousing and analytics. Snowflake's cloud-based architecture provides a highly scalable solution for processing large volumes of data.

Data Engineering

Databricks

Databricks provides support for data engineering workloads, including building data pipelines and managing data workflows. Databricks makes it easy to build scalable data engineering solutions using Apache Spark.

Snowflake

Snowflake provides a fully-managed solution for data warehousing and data engineering workloads. Snowflake's architecture provides a scalable solution for building data pipelines and managing data workflows.

Machine Learning

Databricks

Databricks is designed for machine learning workloads and provides a collaborative workspace for data scientists to build and deploy machine learning models. Databricks provides built-in support for machine learning frameworks like TensorFlow, PyTorch, and Scikit-learn.

Snowflake

Snowflake offers only limited built-in support for machine learning, so it is typically used in conjunction with machine learning platforms like Databricks or SageMaker. Its data warehousing capabilities make it easy to store and analyze large volumes of data for use in machine learning models.

Data Warehousing

Databricks

While Databricks can be used for data warehousing, it is primarily designed for data processing and machine learning workloads.

Snowflake

Snowflake provides a fully-managed solution for data warehousing and analytics. Its cloud-based architecture provides a highly scalable solution for storing and analyzing large volumes of data.

Real-time Analytics

Databricks

Databricks provides support for real-time analytics using Apache Spark's streaming capabilities.
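Streaming analytics of this kind usually group incoming events into time windows and aggregate each window as data arrives. The sketch below counts events per fixed (tumbling) window in plain Python to show the idea. It is not Spark's streaming API, and the event format, the function name `tumbling_counts`, and the `window_seconds` parameter are assumptions for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Event = Tuple[int, str]  # (timestamp in seconds, event key)


def tumbling_counts(events: Iterable[Event],
                    window_seconds: int) -> Dict[Tuple[int, str], int]:
    """Count events per (window start, key) for fixed, non-overlapping windows."""
    counts: Counter = Counter()
    for ts, key in events:
        # Round the timestamp down to the start of its window.
        window_start = (ts // window_seconds) * window_seconds
        counts[(window_start, key)] += 1
    return dict(counts)


if __name__ == "__main__":
    stream = [(1, "click"), (4, "view"), (9, "click"), (12, "click"), (19, "view")]
    result = tumbling_counts(stream, window_seconds=10)
    for (start, key), n in sorted(result.items()):
        print(start, key, n)
```

A real streaming engine also has to handle late and out-of-order events and emit results incrementally; this sketch processes a finite list and ignores both concerns.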

Snowflake

Snowflake does not provide built-in support for real-time analytics, but it can be used in conjunction with real-time streaming platforms like Kafka or Kinesis.

Collaboration

Databricks

Databricks provides a collaborative workspace for teams to work together on data processing and machine learning projects. It includes features like shared notebooks, version control, and project management.

Snowflake

Snowflake does not provide built-in collaboration features, but it can be used in conjunction with collaboration platforms like Slack or Jira.